Techniques for identifying outliers in exploratory analysis. An example of using STATISTICA cluster analysis in auto insurance. Time series analysis

The book, written in 1977 by a well-known American mathematical statistician, outlines the basics of exploratory data analysis, i.e., the primary processing of observational results using the simplest tools: a pencil, paper, and a slide rule. Using numerous examples, the author shows how presenting observations visually in diagrams, tables, and graphs makes it easier to identify patterns and to choose methods for deeper statistical processing. The presentation is accompanied by numerous exercises drawing on rich practical material. The lively, figurative language makes the material easier to understand.

John Tukey. Analysis of the results of observations. Exploratory analysis. – M.: Mir, 1981. – 696 p.


At the time of publication of the note, the book can only be found in second-hand bookshops.

The author subdivides statistical analysis into two stages: exploratory and confirmatory. The first stage includes the transformation of observational data and ways to visualize them, allowing you to identify internal patterns that appear in the data. At the second stage, traditional statistical methods for parameter estimation and hypothesis testing are applied. This book is about exploratory data analysis (for confirmatory analysis, see ). Reading the book does not require prior knowledge of probability theory and mathematical statistics.

Note by Baguzin. Given the year the book was written, the author focuses on visualizing data with a pencil, ruler, and paper (sometimes graph paper). In my opinion, data visualization today is done on a PC, so I have tried to combine the author's original ideas with processing in Excel. My comments are indented.

Chapter 1

A chart is most valuable when it forces us to notice things we did not expect to see. Representing numbers as a stem-and-leaf display helps reveal patterns. For example, taking tens as the stem unit, the number 35 is assigned to stem 3 with leaf 5; for the number 108, the stem is 10 and the leaf is 8.
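The stem/leaf split described above can be sketched in a few lines of Python. This is a minimal illustration, assuming non-negative values and stems of tens; the function names `stem_and_leaf` and `print_stem_and_leaf` are hypothetical and do not come from the book or the attached Excel file.

```python
from collections import defaultdict

def stem_and_leaf(values, leaf_unit=1):
    """Group values into {stem: sorted leaves}, where stem = tens and leaf = units
    (after dividing by leaf_unit). Only non-negative values are supported."""
    plot = defaultdict(list)
    for v in values:
        scaled = int(round(v / leaf_unit))
        if scaled < 0:
            raise ValueError("this sketch handles non-negative values only")
        stem, leaf = divmod(scaled, 10)  # 35 -> (3, 5), 108 -> (10, 8)
        plot[stem].append(leaf)
    return {stem: sorted(leaves) for stem, leaves in sorted(plot.items())}

def print_stem_and_leaf(plot):
    """Print every stem between the smallest and largest, including empty ones."""
    for stem in range(min(plot), max(plot) + 1):
        leaves = "".join(str(leaf) for leaf in plot.get(stem, []))
        print(f"{stem:>3} | {leaves}")

print_stem_and_leaf(stem_and_leaf([35, 31, 42, 47, 33, 58]))
```

For decimals such as the numbers in Fig. 1, passing leaf_unit=0.1 turns 10.3 into stem 10, leaf 3.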

As an example, I took 100 random numbers distributed according to the normal law with a mean of 10 and a standard deviation of 3. To get such numbers, I used the formula =NORM.INV(RAND();10;3) (Fig. 1). Open the attached Excel file. By pressing F9, you will generate a new series of random numbers.

Fig. 1. 100 random numbers

The numbers are mostly distributed in the range from 5 to 16, but it is hard to notice any interesting pattern. The stem-and-leaf plot (Fig. 2) reveals a shape close to the normal distribution. Here each stem is a pair of neighboring numbers, for example 4-5, and the leaves show how many values fall in that range; in our example, there are 3 such values.

Fig. 2. Stem-and-leaf plot

There are two features in Excel that allow you to quickly explore frequency patterns: the FREQUENCY function (Fig. 3; see for more details) and pivot tables (Fig. 4; see for more details, section Grouping numeric fields).
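For readers working outside Excel, the counting that FREQUENCY performs can be mimicked as below. This is a sketch of the documented behavior, assuming the bin boundaries are given in ascending order: each count covers values greater than the previous boundary and less than or equal to the current one, plus one final count for values above the last boundary. The function name `frequency` is hypothetical.

```python
import random
from bisect import bisect_left

def frequency(data, bins):
    """counts[0] = #{x <= bins[0]}, counts[i] = #{bins[i-1] < x <= bins[i]},
    counts[-1] = #{x > bins[-1]}; bins are assumed sorted ascending."""
    counts = [0] * (len(bins) + 1)
    for x in data:
        # bisect_left finds the first boundary >= x, i.e. the bin x belongs to
        counts[bisect_left(bins, x)] += 1
    return counts

rng = random.Random(0)
sample = [rng.gauss(10, 3) for _ in range(100)]  # analogue of =NORM.INV(RAND();10;3)
print(frequency(sample, [4, 6, 8, 10, 12, 14, 16]))
```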

Fig. 3. Analysis using the FREQUENCY array function

Fig. 4. Analysis using pivot tables

A stem-and-leaf (frequency) display reveals the following features of the data:

- division into groups;

- asymmetric tails: one tail is longer than the other;

- unexpectedly "popular" and "unpopular" values;

- the value around which the observations are "centered";

- how large the spread of the data is.

Chapter 2. SIMPLE DATA SUMMARY - NUMERICAL AND GRAPHIC

The stem-and-leaf display lets us perceive the overall picture of the sample. Our next task is to learn how to express the most common features of samples concisely, and data summaries serve this purpose. However helpful summaries may be, they do not convey every detail of the sample. If there are not so many details as to be confusing, it is best to have the full data in front of our eyes, arranged conveniently. For large data sets, summaries are necessary, but we do not assume or expect that they will replace the complete data. Adding details often contributes little, yet it is important to realize that sometimes details contribute a lot.

If, to characterize the sample as a whole, we need to select several numbers that are easy to find, then we will probably need:

- extreme values - the largest and smallest, which we will mark with the symbol "1" (according to their rank or depth);

- some middle value.

Median = the middle value.

For a series written as a stem-and-leaf display, the middle value is easy to find by counting in from either end, giving the extreme value rank 1. In this way each value in the sample receives a rank, and since counting can start from either end, each value gets two ranks. The smaller of the two ranks assigned to the same value is called its depth (Fig. 5). The depth of an extreme value is always 1.

Fig. 5. Determining depth from the two ranking directions

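depth (or rank) of the median = (1 + number of values)/2; for example, with 13 values the median has depth 7, and with 12 values its depth is 6.5, i.e., it is the average of the 6th and 7th values.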

If we want to add two more numbers to form a 5-number summary, it is natural to find them by counting in halfway from each end to the median. Finding the median and then these new values can be thought of as folding a sheet of paper, so it is natural to call the new values folds (Tukey's English term is hinges; today the term quartile is more common, although quartiles computed by software may differ slightly from folds).

When folded, a series of 13 values might look like this:

The five numbers characterizing the series, in ascending order, are -3.2; 0.1; 1.5; 3.0; 9.8: one at each end, one at each fold, and one at the median. We will depict these five numbers (extremes, folds, median), which make up the 5-number summary, as the following simple diagram:

where on the left we show the number of values (marked with #), the depth of the median (letter M), the depth of the folds (letter C), and the depth of the extreme values (always 1, so nothing else needs to be marked).
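The depth rules above translate directly into code. The sketch below assumes Tukey's convention that the fold depth is obtained by dropping any half from the median depth, adding 1, and halving (for 13 values: median depth 7, fold depth 4); spreadsheet quartile functions use other conventions and may return slightly different numbers. All function names are hypothetical.

```python
import math

def median_depth(n):
    return (n + 1) / 2

def fold_depth(n):
    # drop the .5 (if any) from the median depth, add 1, halve
    return (math.floor(median_depth(n)) + 1) / 2

def value_at_depth(sorted_vals, depth, from_top=False):
    """Value at a depth counted from the bottom (or top); a depth ending in .5
    is the average of the two neighbouring values."""
    seq = sorted_vals[::-1] if from_top else sorted_vals
    lo, hi = math.floor(depth), math.ceil(depth)
    return (seq[lo - 1] + seq[hi - 1]) / 2

def five_number_summary(values):
    s = sorted(values)
    n = len(s)
    dm, dc = median_depth(n), fold_depth(n)
    return (s[0], value_at_depth(s, dc), value_at_depth(s, dm),
            value_at_depth(s, dc, from_top=True), s[-1])

def print_summary(values):
    """Print the summary in the layout described above: #, M, C and depth-1 lines."""
    n = len(values)
    lo, c_lo, med, c_hi, hi = five_number_summary(values)
    print(f"#{n}")
    print(f"M{median_depth(n):g}    {med:g}")
    print(f"C{fold_depth(n):g}  {c_lo:g}    {c_hi:g}")
    print(f"1   {lo:g}    {hi:g}")

print_summary(range(1, 14))
```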

Fig. 8 shows how to display the 5-number summary graphically. This type of graph is called a box-and-whisker plot (boxplot).

Fig. 8. Schematic plot, or box-and-whisker plot

Unfortunately, Excel's stock charts are built from only three or four values (Fig. 9; see how to get around this limitation). To plot a 5-number summary, you can use the R statistical package (Fig. 10; see Basic R graphics capabilities: scatter plots for details; if you are not familiar with R, you can start with). In addition to the 5 numbers, the boxplot() function in R also marks outliers (more about them a little later).

Fig. 9. Possible types of stock charts in Excel

Fig. 10. Boxplot in R; to build it, run the command boxplot(count ~ spray, data = InsectSprays): the InsectSprays dataset built into R is loaded and the graph shown is drawn

When constructing a box-and-whisker plot, we will follow this simple scheme:

- "C-width" = the difference between the values of the two folds;

- "step" = 1.5 times the C-width;

- "inner barriers" lie one step beyond the folds;

- "outer barriers" lie one step beyond the inner barriers;

- values between an inner barrier and the adjacent outer barrier are called "outside" values;

- values beyond the outer barriers are called "far out" values (outliers);

- "range" = the difference between the extreme values.
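The scheme above can be sketched as a small classifier. It assumes the same fold convention as Tukey's depth rule and treats a value lying exactly on a barrier as not beyond it; the names `folds` and `classify` and the sample data are hypothetical.

```python
import math

def folds(sorted_vals):
    """Lower and upper folds using Tukey's depth rule:
    fold depth = (floor((n + 1) / 2) + 1) / 2, counted from each end."""
    n = len(sorted_vals)
    d = (math.floor((n + 1) / 2) + 1) / 2
    lo, hi = math.floor(d), math.ceil(d)
    lower = (sorted_vals[lo - 1] + sorted_vals[hi - 1]) / 2
    upper = (sorted_vals[-lo] + sorted_vals[-hi]) / 2
    return lower, upper

def classify(values):
    """Apply the C-width / step / barrier scheme and sort values into groups."""
    s = sorted(values)
    lower, upper = folds(s)
    step = 1.5 * (upper - lower)                  # step = 1.5 x C-width
    inner = (lower - step, upper + step)          # inner barriers
    outer = (lower - 2 * step, upper + 2 * step)  # outer barriers
    outside = [v for v in s
               if outer[0] <= v < inner[0] or inner[1] < v <= outer[1]]
    far_out = [v for v in s if v < outer[0] or v > outer[1]]
    return {"folds": (lower, upper), "step": step, "inner": inner,
            "outer": outer, "outside": outside, "far_out": far_out,
            "range": s[-1] - s[0]}

data = list(range(1, 14)) + [30, 60]  # hypothetical sample with two suspicious values
print(classify(data))
```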

Fig. 19. Calculation of the moving median: (a) in detail for part of the data; (b) for the entire sample

Fig. 20. Smooth curve
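Fig. 19 refers to a moving median. A common form in Tukey's work is the running median of three; the sketch below assumes the simplest end rule (the first and last values are copied unchanged), whereas Tukey uses more refined end-point rules. Repeating the pass until nothing changes gives the "3R" smoother. Function names are hypothetical.

```python
from statistics import median

def running_median_3(series):
    """Replace each interior value by the median of itself and its two neighbours;
    the end values are copied unchanged (a simplifying assumption)."""
    if len(series) < 3:
        return list(series)
    out = [series[0]]
    for i in range(1, len(series) - 1):
        out.append(median(series[i - 1:i + 2]))
    out.append(series[-1])
    return out

def smooth_3r(series):
    """Repeat the running median of 3 until the series stops changing."""
    current = list(series)
    while True:
        nxt = running_median_3(current)
        if nxt == current:
            return current
        current = nxt

print(smooth_3r([1, 9, 2, 3, 10, 4]))
```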

Chapter 10. USING TWO-WAY ANALYSIS

It is time to consider two-way analysis, both because of its importance and because it is an introduction to a variety of research methods. A two-factor (response) table is built from:

- one kind of response;

- two factors, each of which is present in every observation.

Two-factor table of residuals. Row-plus-column analysis. Fig. 21 shows average monthly temperatures for three locations in Arizona.

Fig. 21. Average monthly temperatures in three Arizona cities, °F

Let's determine the median for each place, and subtract it from the individual values (Fig. 22).

Fig. 22. Approximation values (medians) for each city, and residuals

Now let's determine the approximation (median) for each row, and subtract it from the row values (Fig. 23).

Fig. 23. Approximation values (medians) for each month, and residuals

In Fig. 23 we introduce the concept of an "effect". The number -24.7 is a column effect and the number 19.1 is a row effect. An effect shows how a factor or a set of factors manifests itself in each observed value. If the part captured by the effects is larger than what remains, it is easier to see and understand what is happening in the data. The number subtracted from all data without exception (here 70.8) is called the "total"; it represents everything common to all the data. Thus, for the quantities in Fig. 23: data = total + row effect + column effect + residual.

This is the pattern of a row-PLUS-column analysis. We return to our old trick: find a simple partial description, one that is easier to understand and whose subtraction gives us a deeper look at what has not yet been described.
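The steps above (subtract column medians, then row medians, and collect the common part in the total) form one sweep of Tukey's median polish; in practice the sweeps are repeated until the medians stop changing. The sketch below illustrates this under those assumptions: the function name is hypothetical, the table is made up (it is not the Arizona data of Fig. 21), and the result always satisfies data = total + row effect + column effect + residual.

```python
from statistics import median

def median_polish(table, max_iter=10, tol=1e-9):
    """Fit data[i][j] = total + row[i] + col[j] + resid[i][j] by alternately
    removing column and row medians. Returns (total, row, col, resid)."""
    resid = [[float(v) for v in row] for row in table]
    n_rows, n_cols = len(resid), len(resid[0])
    total, row_eff, col_eff = 0.0, [0.0] * n_rows, [0.0] * n_cols
    for _ in range(max_iter):
        change = 0.0
        # 1) column sweep: remove each column's median (here: each place)
        for j in range(n_cols):
            m = median(resid[i][j] for i in range(n_rows))
            for i in range(n_rows):
                resid[i][j] -= m
            col_eff[j] += m
            change += abs(m)
        # move the common part of the column effects into the total
        m = median(col_eff)
        col_eff = [c - m for c in col_eff]
        total += m
        # 2) row sweep: remove each row's median (here: each month)
        for i in range(n_rows):
            m = median(resid[i])
            resid[i] = [r - m for r in resid[i]]
            row_eff[i] += m
            change += abs(m)
        m = median(row_eff)
        row_eff = [r - m for r in row_eff]
        total += m
        if change < tol:  # medians no longer move: the fit has converged
            break
    return total, row_eff, col_eff, resid

# Illustrative 3 x 3 table (rows: months, columns: places); NOT the data of Fig. 21
table = [[46, 70, 75],
         [55, 80, 86],
         [62, 88, 93]]
total, rows, cols, resid = median_polish(table)
print(total, rows, cols)
```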

What can we learn from the full two-way analysis? The largest residual, 1.9, is small compared with how much the effects change from place to place and from month to month. Flagstaff is about 25°F cooler than Phoenix, while Yuma is 5-6°F warmer than Phoenix. The month effects decrease monotonically from month to month, slowly at first, then rapidly, then slowly again. This resembles a symmetry around October (Note by Baguzin: I observed this pattern earlier in the day-length example; see .). We have removed both veils, the effect of season and the effect of place, and can now see quite a lot that had previously gone unnoticed.

Fig. 24 shows a two-factor chart. Although the main thing in this figure is the approximation, we should not neglect the residuals. At four points we drew short vertical lines whose lengths equal the corresponding residuals, so that their far ends are located not at the approximation values but at

data = approximation PLUS residual.

Fig. 24. Two-factor chart

Note also a property of this and any other two-factor chart: it has a scale in one direction only. The vertical scale is shown by the dotted horizontal lines drawn along the sides of the picture, and there is no scale at all in the horizontal direction.

For Excel features, see . It is curious that some of the formulas used in this note are named after Tukey.

What follows in the book becomes, in my opinion, quite complicated...

Answer:

Using graphical methods, you can find relationships, trends, and shifts "hidden" in unstructured data sets.

Visualization methods include:

Representation of data as bar and line charts in multidimensional space;

Overlaying and merging multiple images;

Identification and labeling of subgroups of data that meet certain conditions;

Splitting or merging subgroups of data on a chart;

Data aggregation;

Data smoothing;

Construction of pictograms;

Creation of mosaic structures;

Spectral planes and contour maps; dynamic rotation and dynamic layering of three-dimensional images; selection of particular subsets and blocks of data, etc.

Graph types in Statistica:

§ two-dimensional graphs (e.g., histograms);

§ three-dimensional graphs;

§ matrix graphs;

§ pictographs.

Answer: These plots are sets of 2D, 3D, ternary, or n-dimensional plots (such as histograms, scatter plots, line plots, surfaces, pie charts), one plot for each selected category (subset) of observations.

For example, such a graph can be a set of pie charts, one for each category of the selected variable (for gender: two categories, two charts).

Categorized data can be treated in a similar way: for example, when statistics on customers have been accumulated and the purchase amount must be analyzed by category (men/women; elderly/middle-aged/young).

In Statistica: histograms, scatter plots, line plots, pie charts, 3D plots, and 3D ternary plots.

As can be seen, this variable is approximately normally distributed within each group (color type).

5. What information about the nature of the data can be obtained from the analysis of scatterplots and categorized scatterplots?

Answer:

Scatter plots are commonly used to reveal the nature of the relationship between two variables (for example, profit and payroll) because they provide much more information than the correlation coefficient.

If it is assumed that one of the parameters depends on the other, then usually the values of the independent parameter are plotted along the horizontal axis, and the values of the dependent parameter are plotted along the vertical axis. Scatterplots are used to show the presence or absence of a correlation between two variables.

Each point on the diagram represents two characteristics, such as an individual's age and income, each plotted on its own axis. This often helps to determine whether there is a significant statistical relationship between these characteristics and what type of function is worth fitting.
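A quick way to see why a scatter plot says more than the correlation coefficient: in the made-up example below, y is an exact function of x (a parabola), yet Pearson's r is zero, because r measures only linear association. The helper `pearson` is written out by hand so the block is self-contained.

```python
import math

def pearson(xs, ys):
    """Pearson's correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

xs = list(range(-5, 6))
ys = [x * x for x in xs]          # perfect, but non-linear, dependence
print(round(pearson(xs, ys), 6))  # prints 0.0: the relationship is invisible to r
```

A scatter plot of the same points would show the parabola immediately.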

6. What information about the nature of the data can be obtained from the analysis of histograms and categorized histograms?

Answer: Histograms are used to study the frequency distributions of variable values. The frequency distribution shows which specific values or ranges of values of the variable occur most frequently, how widely the values vary, whether most observations lie near the mean, whether the distribution is symmetric or asymmetric, multimodal (i.e., has two or more peaks) or unimodal, etc. Histograms are also used to compare observed distributions with theoretical or expected ones.

Categorized histograms are sets of histograms corresponding to different values of one or more categorizing variables or sets of logical categorization conditions.

A histogram is a way of presenting statistical data graphically, as a bar chart. It displays the distribution of individual measurements of a product or process parameter. It is sometimes called a frequency distribution, since it shows how often the measured values of the object's parameters occur.

The height of each column indicates the frequency of occurrence of parameter values in the selected range, and the number of columns indicates the number of selected ranges.

An important advantage of the histogram is that it makes trends in the measured quality parameters of an object visible and lets you visually assess their distribution law. In addition, a histogram makes it possible to quickly determine the center, spread, and shape of the distribution of a random variable. As a rule, a histogram is built by dividing the range of the measured parameter into intervals.

7. What is the fundamental difference between categorized plots and matrix plots in Statistica?

Answer:

Matrix plots also consist of multiple plots; however, here each of them is (or may be) based on the same set of observations, and plots are built for all combinations of variables from one or two lists.

Matrix plots show relationships between multiple variables as a matrix of XY plots. The most common type of matrix plot is the scatterplot matrix, which can be thought of as the graphical equivalent of a correlation matrix.

Matrix plots: scatterplots. This type of matrix plot displays 2D scatter plots arranged as a matrix (the values of the column variable are used as X coordinates, and the values of the row variable as Y coordinates). Histograms showing the distribution of each variable are placed on the diagonal of the matrix (for square matrices) or along the edges (for rectangular matrices).

See also section Reducing the sample size.

Categorized plots require the same choice of variables as uncategorized plots of the corresponding type (for example, two variables for a scatterplot). In addition, at least one grouping variable (or a rule for dividing observations into categories) must be specified, indicating which subgroup each observation belongs to. The grouping variable itself is not plotted; it serves only as the criterion for dividing the analyzed observations into subgroups. One graph is built for each group (category) defined by the grouping variable.

8. What are the advantages and disadvantages of graphical methods for exploratory data analysis?

Answer: + Clarity and simplicity.

+ Visual insight (multidimensional graphical representation of the data, from which the analyst identifies patterns and relationships).

- The methods give approximate values.

- A high degree of subjectivity in interpreting the results.

- Lack of analytical models.

9. What analytical methods of primary exploratory data analysis do you know?

Answer: Statistical methods, neural networks.

10. How to test the hypothesis about the agreement of the distribution of sample data with the normal distribution model in the Statistica system?

Answer: The χ² (chi-square) distribution with n degrees of freedom is the distribution of the sum of squares of n independent standard normal random variables.

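The chi-square statistic measures the discrepancy between the observed and expected frequencies. Set the significance level to α = 0.05. If p > α, the hypothesis that the data follow a normal distribution is not rejected; if p ≤ α, it is rejected.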

- To test the hypothesis that the sample distribution agrees with the normal model using the chi-square test, select the Statistics/Distribution Fitting menu item. Then, in the Fitting Continuous Distributions dialog box, set the type of theoretical distribution (Normal), select the variable (Variables), and set the analysis parameters (Parameters).
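Outside Statistica, the same kind of chi-square goodness-of-fit test can be sketched with the Python standard library. This is not Statistica's exact procedure: it assumes k = 7 bins that are equally probable under a normal model whose mean and standard deviation are estimated from the sample, so the test has k - 1 - 2 = 4 degrees of freedom, and it uses the closed-form chi-square tail probability that holds only for an even number of degrees of freedom. Function names are hypothetical.

```python
import math
import random
from bisect import bisect_right
from statistics import NormalDist, mean, stdev

def chi2_sf(x, df):
    """Upper-tail probability P(chi2 > x). Uses the closed form
    exp(-x/2) * sum_{i=0}^{df/2-1} (x/2)**i / i!, valid only for even df."""
    if df <= 0 or df % 2:
        raise ValueError("this sketch supports only positive even df")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total

def chi2_normality_test(data, k=7):
    """Chi-square goodness of fit to a normal model with estimated mean and sd,
    using k bins that are equally probable under that model."""
    n = len(data)
    model = NormalDist(mean(data), stdev(data))
    edges = [model.inv_cdf(i / k) for i in range(1, k)]  # k - 1 interior boundaries
    observed = [0] * k
    for x in data:
        observed[bisect_right(edges, x)] += 1
    expected = n / k
    stat = sum((o - expected) ** 2 / expected for o in observed)
    df = k - 1 - 2  # minus 2 because the mean and sd were estimated
    return stat, df, chi2_sf(stat, df)

rng = random.Random(42)
sample = [rng.gauss(10, 3) for _ in range(200)]
stat, df, p = chi2_normality_test(sample)
alpha = 0.05
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.3f}:",
      "normality not rejected" if p > alpha else "normality rejected")
```

Choosing an even k would make df odd, which this simplified tail formula does not cover.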

11. Which main statistical characteristics of quantitative variables do you know? Describe and interpret them in terms of the problem being solved.

Answer: Main statistical characteristics of quantitative variables:

mathematical expectation (estimated by the sample mean, i.e., the sum of the values divided by n; for example, the average output across enterprises);

median (the middle value of the ordered sample);

standard deviation (the square root of the variance);

variance (a measure of the spread of a random variable, i.e., of its deviation from the mathematical expectation);

asymmetry (skewness) coefficient (shows the shift relative to the center of symmetry: if it is positive, the peak of the distribution is shifted to the left and the right tail is longer; if it is negative, the shift is to the right);

kurtosis coefficient (describes how peaked or heavy-tailed the distribution is; the excess kurtosis of a normal distribution is 0);

minimum and maximum sample values;

range (maximum minus minimum);

sample upper and lower quartiles;

mode (the most frequent value).
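Most of these characteristics are available in Python's `statistics` module; skewness and kurtosis are not, so the sketch below computes them from central moments (g1 = m3 / m2^1.5 and excess kurtosis g2 = m4 / m2^2 - 3). These moment formulas are an assumption: packages such as Statistica usually apply small-sample corrections, so their values will differ slightly. `describe` is a hypothetical helper.

```python
import statistics as st

def describe(data):
    """Main characteristics of a quantitative variable."""
    n = len(data)
    mean = st.fmean(data)
    m2 = sum((x - mean) ** 2 for x in data) / n  # population central moments
    m3 = sum((x - mean) ** 3 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    q1, _, q3 = st.quantiles(data, n=4)          # default 'exclusive' method
    return {
        "mean": mean,
        "median": st.median(data),
        "std": st.stdev(data),                 # sample standard deviation (n - 1)
        "variance": st.variance(data),         # sample variance (n - 1)
        "skewness": m3 / m2 ** 1.5,            # > 0: long right tail, peak shifted left
        "excess_kurtosis": m4 / m2 ** 2 - 3,   # about 0 for a normal distribution
        "min": min(data),
        "max": max(data),
        "range": max(data) - min(data),
        "q1": q1,
        "q3": q3,
        "mode": st.mode(data),
    }

print(describe([2, 4, 4, 4, 5, 5, 7, 9]))
```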

12. What measures of association are used to measure the strength of the relationship between quantitative and ordinal variables? How are they calculated in Statistica and interpreted?

Answer: Correlation is a statistical relationship between two or more random variables.

In a correlation, changes in one or more of these variables are accompanied by systematic changes in the other variable or variables. The correlation coefficient serves as a measure of correlation between two random variables.

Quantitative:

The correlation coefficient indicates how two random variables change together.

Pearson's correlation coefficient (measures the degree of linear relationship between variables; one can say that it determines the degree to which the values of two variables are proportional to each other).

Partial correlation coefficient (measures the strength of the relationship between two variables when the values of the other variables are held constant).

Ordinal:

Spearman's rank correlation coefficient (used to study relationships between phenomena statistically: the objects under study are ordered by some attribute, i.e., assigned serial numbers called ranks).
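Spearman's coefficient is Pearson's coefficient computed on ranks. The sketch below assigns tied values the average of their ranks (a common convention, assumed here) and shows that for a monotonic but non-linear relationship Spearman's coefficient is 1 while Pearson's is noticeably lower. Helper names and data are hypothetical.

```python
import math

def pearson(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def average_ranks(values):
    """1-based ranks; tied values share the average of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def spearman(xs, ys):
    return pearson(average_ranks(xs), average_ranks(ys))

xs = [1, 2, 3, 4, 5]
ys = [math.exp(x) for x in xs]  # monotonic, strongly non-linear
print(spearman(xs, ys), pearson(xs, ys))
```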


1. The concept of data mining. Data mining methods.

Answer: Data mining is the identification of hidden patterns or relationships between variables in large arrays of raw data, i.e., the process of automatically searching for patterns in large datasets. As a rule, it is subdivided into classification, modeling, and forecasting tasks. The term Data Mining was introduced by Gregory Piatetsky-Shapiro in 1989.

2. The concept of exploratory data analysis. What is the difference between the Data Mining procedure and the methods of classical statistical data analysis?

Answer: Exploratory data analysis (EDA) is used to find systematic relationships between variables in situations where there are no (or insufficient) a priori ideas about the nature of these relationships.

Traditional methods of data analysis focus mainly on testing pre-formulated hypotheses and on "rough" exploratory analysis, whereas one of the main tenets of Data Mining is the search for non-obvious patterns.

3. Methods of graphical exploratory data analysis. Statistica tools for graphical exploratory data analysis.

Answer: Using graphical methods, you can find relationships, trends, and shifts "hidden" in unstructured data sets.

Statistica tools for graphical exploratory analysis: categorized radial charts and histograms (2D and 3D).



Updated 07/29/2008

My rather chaotic thoughts on the application of statistical methods in the processing of proteomic data.

APPLICATIONS OF STATISTICS IN PROTEOMICS

Review of methods for the analysis of experimental data

Pyatnitsky M.A.

V.N. Orekhovich State Research Institute of Biomedical Chemistry, RAMS

119121, Moscow, Pogodinskaya st. d.10,

email: mpyat@bioinformatics.ru

Proteomic experiments require careful, thoughtful statistical processing of the results. Several important features characterize proteomic data:

- there are many variables;

- the relationships between these variables are complex, and they are assumed to reflect biological facts;

- the number of variables is much greater than the number of samples, which makes many statistical methods very difficult to apply.

However, similar features are also inherent in many other data obtained using high-throughput technologies.

Typical tasks of a proteomic experiment are:

- comparison of protein expression profiles between different groups (e.g., cancer vs. normal). Usually the task is to construct a decision rule that separates one group from another. Variables with the highest discriminatory ability (biomarkers) are also of interest.

- study of relationships between proteins.

Here I will focus mainly on applying statistics to the analysis of mass spectra, although much of what is said also applies to other types of experimental data. The methods themselves are hardly discussed (except for ROC curves, which are described in more detail); rather, the arsenal of data-analysis methods is outlined very briefly, with pointers on how to apply it meaningfully.

Exploratory Analysis

Exploratory data analysis (EDA) is the most important step in working with any data set; in my opinion, it is perhaps the most important stage of the whole statistical processing of data. It is at this stage that you need to get a feel for the data and understand which methods are best to apply and, more importantly, what results can be expected. Otherwise, the analysis becomes a blind game ("let's try this method, and then that one"), a senseless enumeration of the statistical arsenal, i.e., data dredging. Statistics is dangerous because it always produces some result. Now that launching the most complex computational method takes only a couple of mouse clicks, this is especially true.

According to Tukey, the goals of exploratory analysis are:

- maximize insight into a data set;

- uncover underlying structure;

- extract important variables;

- detect outliers and anomalies;

- test underlying assumptions;

- develop parsimonious models; and

- determine optimal factor settings.

At this stage, it is wise to get as much information about the data as possible, primarily with graphical tools. Plot a histogram for each variable. As trite as it sounds, look at the descriptive statistics. It is useful to look at scatter plots, drawing the points with different symbols to indicate class membership. It is also interesting to look at the results of PCA (principal component analysis) and MDS (multidimensional scaling). In short, EDA is primarily the broad use of graphical visualization.

Projection pursuit methods, which search for the most “interesting” projection of the data, are promising. This work can usually be automated to some degree (GGobi). The index that measures how interesting a projection is can be chosen freely.

Normalization

Data are usually not normally distributed, which is inconvenient for statistical procedures; a log-normal distribution is common. A simple logarithm can bring the distribution much closer to normal. In general, do not underestimate simple methods such as taking logarithms and other data transformations. In practice, there are many cases where meaningful results appear only after taking logarithms, although before this preprocessing the results were of little content (here is an example about the mass spectrometry of wines).
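The effect of a log transform on log-normal data can be checked numerically. The sketch below is illustrative only: it draws synthetic log-normal "intensities" with `random.lognormvariate` (not real mass-spectrometry data) and compares the moment skewness g1 = m3 / m2^1.5 before and after taking logarithms.

```python
import math
import random

def skewness(data):
    """Moment-based skewness g1 = m3 / m2**1.5 (no small-sample correction)."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n
    m3 = sum((x - mean) ** 3 for x in data) / n
    return m3 / m2 ** 1.5

rng = random.Random(1)
intensities = [rng.lognormvariate(0, 1) for _ in range(2000)]  # synthetic data
logged = [math.log(x) for x in intensities]
print(f"skewness raw: {skewness(intensities):.2f}, after log: {skewness(logged):.2f}")
```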

In general, the choice of normalization is a separate problem to which many works are devoted. The choice of preprocessing and scaling method can significantly affect the results of the analysis (Berg et al, 2006). In my opinion, it is always better to apply the simplest normalization by default (for example, taking logarithms when the distribution is not symmetric) than not to use these methods at all.

Here are some examples of graphical visualization and application of simple statistical methods for exploratory data analysis.

Examples

The following are examples of graphs that may be worth building for each variable. On the left are density estimates for each of the two classes (red: cancer, blue: control). Note that the individual values from which each density estimate is built are also shown beneath the curves. On the right, the ROC curve and the area under it (AUC) are shown. Thus, one can immediately see how well each variable discriminates between the classes. After all, discrimination between classes is usually the ultimate goal of the statistical analysis of proteomic data.
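The ROC curve and its area can be sketched without any plotting library. The code below assumes that higher intensity points toward cancer, builds ROC points by lowering the threshold through every distinct value, and computes the AUC both as the area under those points and as the Mann-Whitney probability that a random cancer sample scores higher than a random control (ties count 1/2); the two agree. Function names and data are hypothetical.

```python
def auc_mann_whitney(pos, neg):
    """AUC as the probability that a random positive (cancer) sample scores
    higher than a random negative (control) sample; ties count as 1/2."""
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def roc_points(pos, neg):
    """(FPR, TPR) pairs obtained by lowering the threshold through every distinct
    score; a sample is called positive when its score is >= the threshold."""
    points = [(0.0, 0.0)]
    for t in sorted(set(pos) | set(neg), reverse=True):
        tpr = sum(p >= t for p in pos) / len(pos)
        fpr = sum(n >= t for n in neg) / len(neg)
        points.append((fpr, tpr))
    return points

def trapezoid_area(points):
    """Area under a piecewise-linear curve through the given points."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Hypothetical peak intensities for one variable (not real data)
cancer = [5.1, 6.3, 4.8, 7.0, 5.9]
control = [4.2, 5.0, 3.9, 4.8, 4.4]
print(auc_mann_whitney(cancer, control), trapezoid_area(roc_points(cancer, control)))
```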

The following figure illustrates normalization: after taking the logarithm, a typical peak-intensity distribution in a mass spectrum (left) becomes close to normal (right).

Next, we show the use of a heatmap for exploratory data analysis. Columns are patients, rows are genes, and the color encodes the numerical value. A clear division into several groups is visible. This is a great example of EDA giving an immediate picture of the data.

The following picture shows an example of a gel-view plot, a standard technique for visualizing a large set of spectra. Each row is a sample and each column is a peak; the intensity is encoded by color (the brighter, the higher). Such pictures can be produced, for example, in ClinProTools. There is a big drawback, however: the rows (samples) appear in the order in which they were loaded. It is much better to reorder the rows so that similar samples also appear next to each other on the chart. In effect, a gel view is a heatmap without row and column reordering and without the dendrograms on the sides.
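One simple way to put similar samples next to each other is sketched below. It is a substitute, not the author's method: a full heatmap would normally use the leaf order of a hierarchical-clustering dendrogram, whereas this greedy nearest-neighbour chain (starting from an arbitrary first row) only approximates that. The spectra are hypothetical.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_neighbor_order(rows, start=0):
    """Greedy ordering: begin at `start`, then repeatedly append the closest
    row not yet placed. A simple stand-in for dendrogram leaf ordering."""
    remaining = set(range(len(rows))) - {start}
    order = [start]
    while remaining:
        last = rows[order[-1]]
        # Ties in distance are broken by the smaller row index.
        nxt = min(remaining, key=lambda i: (euclidean(last, rows[i]), i))
        order.append(nxt)
        remaining.remove(nxt)
    return order


# Hypothetical gel-view matrix: rows = samples in loading order, columns = peaks.
spectra = [
    [1.0, 0.1, 0.0],  # sample 0, group A
    [0.0, 0.9, 1.0],  # sample 1, group B
    [0.9, 0.2, 0.1],  # sample 2, group A
    [0.1, 1.0, 0.9],  # sample 3, group B
    [1.2, 0.0, 0.1],  # sample 4, group A
]
print(nearest_neighbor_order(spectra))  # [0, 2, 4, 3, 1]: group A first, then B
```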

The following picture shows an example of multidimensional scaling. Circles are controls, triangles are cancer samples. The cancer group clearly has a much larger variance, so constructing a decision rule looks quite feasible. This striking result is obtained using only the first two coordinates! Looking at such a picture, one can feel optimistic about the results of further data processing.

Missing value problem

The next problem that the researcher faces is the problem of missing values. Again, there are many books devoted to this topic, each of which describes dozens of ways to solve this problem. Missing values are often found in data generated by high-throughput experiments. Many statistical methods require complete data.

Here are the main ways to solve the problem of missing values:

. remove rows/columns with missing values. This is justified if there are relatively few missing values; otherwise you will end up removing almost everything

. generate new data to replace the missing ones (replace with the average, get from the estimated distribution)

. use methods that are insensitive to missing data

. repeat the experiment!
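The first two options in the list can be compared on a toy table. The sketch assumes that missing values are stored as None and that every column has at least one observed value (otherwise statistics.fmean raises an error); the table itself is hypothetical.

```python
import statistics


def drop_incomplete_rows(rows):
    """Listwise deletion: keep only rows with no missing (None) values."""
    return [r for r in rows if all(v is not None for v in r)]


def mean_impute(rows):
    """Replace each missing value with the mean of the observed values in its column."""
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        observed = [r[j] for r in rows if r[j] is not None]
        means.append(statistics.fmean(observed))
    return [[means[j] if v is None else v for j, v in enumerate(r)] for r in rows]


# Hypothetical intensity table: rows = samples, columns = peaks; None = missing.
data = [
    [1.0, 2.0, None],
    [2.0, None, 6.0],
    [3.0, 4.0, 5.0],
    [None, 6.0, 7.0],
]
print(len(drop_incomplete_rows(data)))  # 1: only one complete row survives
print(mean_impute(data))
```

With only 3 missing cells out of 12, listwise deletion keeps one sample out of four. Mean imputation keeps every sample but shrinks the variance of each column, so it is the crudest of the replacement strategies.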

Outlier problem

An outlier is a sample with sharply different indicators from the main group. Again, this topic is deeply and extensively developed in the relevant literature.

Why are outliers dangerous? First of all, they can strongly affect non-robust (outlier-sensitive) statistical procedures. Even a single outlier can substantially change the estimates of the mean and variance.
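The sensitivity of the mean and variance to a single outlier is easy to demonstrate. The sketch below compares them with two robust counterparts, the median and the median absolute deviation (MAD); the numbers are made up for illustration.

```python
import statistics


def summarize(values):
    """Non-robust (mean, SD) and robust (median, MAD) summaries of a sample."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    return {
        "mean": statistics.fmean(values),
        "sd": statistics.stdev(values),
        "median": med,
        "mad": mad,
    }


clean = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0, 10.2, 9.8]
with_outlier = clean[:-1] + [100.0]  # one value replaced by a gross outlier

for name, sample in (("clean", clean), ("with outlier", with_outlier)):
    s = summarize(sample)
    print(name, {k: round(v, 2) for k, v in s.items()})
```

Replacing one value out of eight moves the mean from 10 to about 21 and inflates the SD by a factor of about 50, while the median shifts by only 0.1.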

Outliers are difficult to see in multivariate data, since they can appear only in the values of one or two variables (let me remind you that in a typical case, a proteomic experiment is described by hundreds of variables). This is where analyzing each variable separately comes in handy - when looking at descriptive statistics or histograms (like those given above), such an outlier is easy to detect.

There are two strategies for looking for outliers:

1) manually: scatter plot analysis, PCA, and other exploratory analysis methods. Try building a dendrogram: an outlier will show up on it as a separate branch that splits off close to the root.

2) using one of the many formal detection criteria that have been developed (Yang, Mardia, Schjwager, …)

Dealing with outliers

. removal of outliers

. apply outlier-resistant (robust) statistical methods

Keep in mind, however, that an outlier may be not an experimental error but a genuinely new biological fact. Of course, this happens extremely rarely, but still...

The following figure shows possible types of outliers, classified by their effect on statistics.

Let us illustrate how outliers affect the behavior of correlation coefficients.

We are interested in case (f). With only 3 outliers present, the Pearson correlation coefficient equals 0.68, whereas the Spearman and Kendall coefficients give much more reasonable estimates (no correlation). This is expected, since Pearson's correlation coefficient is a non-robust statistic.
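A similar effect can be reproduced on synthetic data (not the data behind the figure): a 5 x 5 grid of points has zero correlation by construction, and three gross outliers are added along the diagonal. Spearman's coefficient is computed here as the Pearson correlation of tie-averaged ranks; Kendall's coefficient is omitted for brevity.

```python
import math


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Uncorrelated bulk: every combination of x and y in 1..5 ...
x = [float(i) for i in range(1, 6) for _ in range(1, 6)]
y = [float(j) for _ in range(1, 6) for j in range(1, 6)]
# ... plus three gross outliers far out along the diagonal.
x += [20.0, 21.0, 22.0]
y += [20.0, 21.0, 22.0]

print(f"Pearson:  {pearson(x, y):.2f}")   # Pearson:  0.95
print(f"Spearman: {spearman(x, y):.2f}")  # Spearman: 0.30
```

With these particular numbers Spearman's rho does not drop all the way to zero, but it is about a third of Pearson's value: the outliers merely occupy the top three ranks instead of dominating the sums of squares.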

Next, we show how PCA can be used to detect outliers visually.

Of course, you should not always rely on such “artisanal” detection methods; it is better to turn to the formal criteria described in the literature.

Classification and dimensionality reduction

Usually, the main goal of analyzing proteomic data is to construct a decision rule that separates one group of samples from another (e.g., cancer/normal). After exploratory analysis and normalization, the next step is usually to reduce the dimension of the feature space (dimensionality reduction).

Selection of variables

A large number of variables (and this is a standard situation in proteomic experiments):

. complicates data analysis

. usually not all variables have a biological interpretation

. often the goal of the work is to select “interesting” variables (biomarkers)

. degrades the performance of classification algorithms and leads to overfitting.

Therefore, dimensionality reduction is a standard step before classification.

Dimensionality reduction methods can be divided into 2 types:

1) filter

The methods in this group either remove existing "uninteresting" variables or create new variables as linear combinations of the old ones. These include PCA, MDS, methods from information theory, etc.

Another idea is the targeted selection of “interesting” variables: for example, bimodal variables are always worth a look (ideally, in binary classification each mode corresponds to a different class). However, this arguably belongs to exploratory analysis.

Another approach is to eliminate highly correlated variables. Here the variables are clustered, with correlation coefficients used to define the distance between them. Not only the Pearson correlation but other coefficients can be used as well. From each cluster of correlated variables only one is kept (for example, the one with the largest area under the ROC curve).
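A simplified version of this filter is sketched below. Instead of a full cluster analysis it uses a greedy rule (an assumption, not the procedure described above): variables are visited in decreasing order of AUC, and a variable is kept only if its absolute Pearson correlation with every variable already kept is below a threshold. The threshold of 0.9, the direction-independent score max(AUC, 1 - AUC), and the data are all illustrative choices.

```python
import math


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def auc(values, labels):
    """Mann-Whitney AUC: P(value of a positive > value of a negative), ties count 1/2."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def filter_correlated(features, labels, threshold=0.9):
    """Greedy stand-in for 'keep one variable per cluster of correlated variables'."""
    def score(name):
        a = auc(features[name], labels)
        return max(a, 1 - a)  # discriminative power regardless of direction

    kept = []
    for name in sorted(features, key=score, reverse=True):
        if all(abs(pearson(features[name], features[k])) < threshold for k in kept):
            kept.append(name)
    return kept


labels = [1, 1, 1, 0, 0, 0]
features = {  # hypothetical peak intensities
    "p1": [5.0, 6.0, 7.0, 1.0, 2.0, 3.0],
    "p2": [5.2, 6.1, 7.3, 1.1, 2.2, 5.3],  # nearly a copy of p1, slightly worse AUC
    "p3": [2.0, 1.0, 3.0, 2.5, 1.5, 2.8],  # weakly informative, unrelated to p1
}
print(filter_correlated(features, labels))  # ['p1', 'p3']
```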

The figure shows an example of visualizing such a cluster analysis of peaks with a heatmap. The matrix is symmetric, and the color shows the Pearson correlation coefficient (blue: high correlation, red: low correlation). Several clusters of highly dependent variables are clearly visible.

2) Wrapper

Here classification algorithms are used to measure the quality of a selected set of variables. The optimal solution is an exhaustive search over all combinations of variables, because with complex relationships between variables it is quite possible that two variables that are not discriminative separately become so when a third is added. Obviously, exhaustive search is computationally infeasible for any substantial number of variables.

One attempt to overcome this "curse of dimensionality" is to use genetic algorithms to search for the optimal set of variables. Another strategy is to include/exclude variables one at a time while monitoring the Akaike information criterion (AIC) or the Bayesian information criterion (BIC).
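The include/exclude strategy can be sketched as forward selection (inclusion only) driven by AIC. To stay dependency-free, the sketch scores variables with an ordinary least-squares regression instead of a classifier (an assumption made for brevity) and uses the Gaussian-likelihood form AIC = n ln(RSS/n) + 2k up to an additive constant, where k counts the fitted coefficients. The data are constructed so that y depends on x1 and x2 only; for BIC, replace 2k with k ln n.

```python
import math


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def rss(columns, y):
    """Residual sum of squares of an OLS fit of y on an intercept plus `columns`."""
    design = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(design[0])
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(p)]
    beta = solve(xtx, xty)  # normal equations (X'X) beta = X'y
    return sum((yi - sum(b * v for b, v in zip(beta, row))) ** 2 for row, yi in zip(design, y))


def aic(columns, y):
    n = len(y)
    k = len(columns) + 1  # slopes plus intercept
    return n * math.log(rss(columns, y) / n) + 2 * k


def forward_select(features, y):
    """Add the variable that lowers AIC most; stop when no addition lowers it."""
    selected, best = [], aic([], y)
    while len(selected) < len(features):
        scored = []
        for name in features:
            if name not in selected:
                cols = [features[s] for s in selected] + [features[name]]
                scored.append((aic(cols, y), name))
        score, name = min(scored)
        if score >= best:
            break
        selected.append(name)
        best = score
    return selected


# Hypothetical data: y = 2*x1 - x2 + small noise; x3 carries no extra information.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [3, 1, 4, 1, 5, 9, 2, 7]
x3 = [2, 7, 1, 8, 2, 8, 4, 8]
noise = [0.1, -0.1, -0.1, 0.1, 0.1, -0.1, -0.1, 0.1]
y = [2 * a - b + e for a, b, e in zip(x1, x2, noise)]
features = {"x1": x1, "x2": x2, "x3": x3}
print(forward_select(features, y))  # ['x1', 'x2']
```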

For this group of methods, cross-validation is mandatory. More about this is written in the section on comparing classifiers.

Classification

The task is to construct a decision rule that will allow the newly processed sample to be assigned to one class or another.

Unsupervised learning: cluster analysis, i.e. a search for the best (in some sense) grouping of objects. Unfortunately, you usually have to specify the number of clusters a priori or choose a cutoff threshold (for hierarchical clustering), which always introduces an unpleasant element of arbitrariness.

Supervised learning: neural networks, SVM, decision trees, …

A large sample of pre-classified objects is required.

It usually works better than unsupervised learning. In the absence of a separate test sample, cross-validation is used. Overfitting is a problem.

An important and simple test that is rarely performed is running the whole classifier-building procedure on random data. Generate a matrix of the same size as the original sample, fill it with random noise (for example, drawn from a normal distribution), and apply all the steps, including normalization, variable selection and training. If reasonable results are obtained (that is, you have learned to recognize random noise), there is little reason to trust the constructed classifier.

An even simpler way is to randomly permute the class labels of the objects without touching the other variables. This again produces a meaningless data set, on which the classifier should be run.
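The label-permutation test can be sketched as follows. It assumes a deliberately simple classifier (nearest centroid, evaluated by leave-one-out) and synthetic data with a genuine class difference; in a real analysis every step, including normalization and variable selection, would have to be repeated inside the loop.

```python
import random


def nearest_centroid_predict(train_x, train_y, x):
    """Assign x to the class whose mean vector (centroid) is closest."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        members = [row for row, y in zip(train_x, train_y) if y == label]
        centroid = [sum(col) / len(members) for col in zip(*members)]
        dist = sum((a - c) ** 2 for a, c in zip(x, centroid))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def loo_accuracy(xs, ys):
    """Leave-one-out accuracy of the nearest-centroid classifier."""
    hits = 0
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        hits += nearest_centroid_predict(train_x, train_y, xs[i]) == ys[i]
    return hits / len(xs)


rng = random.Random(0)
ys = [0] * 20 + [1] * 20
# Hypothetical data: class 1 is shifted by +2 in each of 5 features.
xs = [[rng.gauss(2.0 * label, 1.0) for _ in range(5)] for label in ys]

real = loo_accuracy(xs, ys)
permuted = []
for _ in range(20):
    shuffled = ys[:]
    rng.shuffle(shuffled)  # destroy any link between labels and data
    permuted.append(loo_accuracy(xs, shuffled))

print(f"real labels:     {real:.2f}")
print(f"permuted labels: {sum(permuted) / len(permuted):.2f} (mean of 20 shuffles)")
```

If the accuracy on permuted labels came out close to the accuracy on the real ones, the whole pipeline would be suspect.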

It seems to me that the constructed classifier can be trusted only if at least one of the above tests for recognition of random data has been performed.

ROC-curve

Receiver-Operating Characteristic curve

. Used to present the results of a classification into 2 classes, provided that the answer is known, i.e. the correct partition is known.

. It is assumed that the classifier has a parameter (cutoff point); varying it yields different divisions into the two classes.

The cutoff determines the numbers of false positive (FP) and false negative (FN) results. Sensitivity and specificity are calculated, and the result is plotted as a point with coordinates (1 - specificity, sensitivity). As the classifier parameter varies, different values of FP and FN are obtained, and the point moves along the ROC curve.

. Accuracy = (TP + TN) / (TP + FP + FN + TN)

. Sensitivity = TP / (TP + FN)

. Specificity = TN / (TN + FP)
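The formulas above, together with the cutoff sweep that traces the ROC curve, can be written out as a short sketch. The scores and labels are hypothetical; the rule "predict positive if score >= cutoff" is an assumed convention, and the extra point (0, 0) corresponds to a cutoff above every score.

```python
def confusion(scores, labels, cutoff):
    """(TP, FP, FN, TN) for the rule 'predict positive if score >= cutoff'."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= cutoff and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= cutoff and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < cutoff and y == 1)
    tn = sum(1 for s, y in zip(scores, labels) if s < cutoff and y == 0)
    return tp, fp, fn, tn


def metrics(tp, fp, fn, tn):
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def roc_points(scores, labels):
    """(1 - specificity, sensitivity) for every distinct cutoff, highest first."""
    points = [(0.0, 0.0)]
    for cutoff in sorted(set(scores), reverse=True):
        m = metrics(*confusion(scores, labels, cutoff))
        points.append((1 - m["specificity"], m["sensitivity"]))
    return points


# Hypothetical classifier scores; label 1 = "sick patient" (the positive class).
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
print(metrics(*confusion(scores, labels, 0.5)))
# {'accuracy': 0.625, 'sensitivity': 0.75, 'specificity': 0.5}
print(roc_points(scores, labels))
```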

What counts as a “positive” event depends on the problem. If the probability of a disease is predicted, then a positive outcome is the class “sick patient” and a negative outcome is the class “healthy patient”.

The clearest explanation I have seen (with excellent Java applets illustrating the essence of the ROC idea) is at http://www.anaesthetist.com/mnm/stats/roc/Findex.htm

ROC curve:

. It is convenient for comparing the relative effectiveness of two classifiers.

. The closer the curve is to the upper left corner, the more predictive the model is.

. The diagonal line corresponds to a “useless classifier”, i.e. complete indistinguishability of the classes.

. Visual comparison does not always allow you to judge accurately which classifier is preferable.

. AUC (Area Under Curve) is a numerical estimate that allows ROC curves to be compared.

. It takes values from 0 to 1; the diagonal line corresponds to 0.5.

Comparison of two ROC curves

Area under the curve (AUC) as a measure for comparing classifiers.

Other examples of ROC curves are given in the section on exploratory analysis.

Comparative analysis of classifiers

Pattern recognition methods can be applied in many different ways, so an important task is to compare different approaches and choose the best one.

The most common way to compare classifiers in articles on proteomics (and not only) today is cross-validation. In my opinion, there is little point in applying the cross-validation procedure once. A smarter approach is to run cross-validation multiple times (ideally, the more the better) and build confidence intervals to estimate classification accuracy. The presence of confidence intervals makes it possible to reasonably decide whether, for example, an improvement in the quality of classification by 0.5% is statistically significant or not. Unfortunately, only a small number of studies provide confidence intervals for accuracy, sensitivity, and specificity. For this reason, the figures given in other works are difficult to compare with each other, since the range of possible values is not indicated.

Another issue is the choice of the type of cross-validation. I prefer 10-fold or 5-fold cross-validation to leave-one-out.
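A sketch of repeated cross-validation under simple assumptions: a nearest-centroid classifier, synthetic data, 100 repetitions of 5-fold cross-validation with fresh random splits, and the 2.5th to 97.5th percentiles of the resulting accuracies as the interval. Such a percentile range reflects only the variability due to the random partitioning, so it is a rough stand-in for the confidence intervals discussed above rather than a formal interval for the true accuracy.

```python
import random
import statistics


def nearest_centroid_predict(train_x, train_y, x):
    """Assign x to the class whose mean vector (centroid) is closest."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        members = [row for row, y in zip(train_x, train_y) if y == label]
        centroid = [sum(col) / len(members) for col in zip(*members)]
        dist = sum((a - c) ** 2 for a, c in zip(x, centroid))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def kfold_accuracy(xs, ys, k, rng):
    """One run of k-fold cross-validation on a fresh random split."""
    idx = list(range(len(xs)))
    rng.shuffle(idx)
    hits = 0
    for f in range(k):
        test = idx[f::k]
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        train_x = [xs[i] for i in train]
        train_y = [ys[i] for i in train]
        hits += sum(nearest_centroid_predict(train_x, train_y, xs[i]) == ys[i] for i in test)
    return hits / len(xs)


def repeated_cv(xs, ys, k=5, repeats=100, seed=1):
    """Mean accuracy and the 2.5th-97.5th percentile range over repeated k-fold runs."""
    rng = random.Random(seed)
    accs = [kfold_accuracy(xs, ys, k, rng) for _ in range(repeats)]
    cuts = statistics.quantiles(accs, n=40)  # 39 cut points: 2.5%, 5%, ..., 97.5%
    return statistics.fmean(accs), (cuts[0], cuts[-1])


data_rng = random.Random(7)
ys = [0] * 20 + [1] * 20
# Hypothetical two-class data with a modest shift of 0.8 in each of 5 features.
xs = [[data_rng.gauss(0.8 * label, 1.0) for _ in range(5)] for label in ys]

mean_acc, (lo, hi) = repeated_cv(xs, ys)
print(f"5-fold CV x 100: accuracy {mean_acc:.3f}, 95% range [{lo:.3f}, {hi:.3f}]")
```

Two classification schemes evaluated this way on the same data can then be compared by their mean accuracies together with these ranges, rather than by a single cross-validation figure.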

Of course, using cross-validation is an “act of desperation”. Ideally, the sample should be divided into 3 parts: the model is built on the first part, its parameters are optimized on the second, and verification is performed on the third. Cross-validation is an attempt to avoid such a split, and it is only justified when the number of samples is small.

Other useful information can be gleaned from numerous runs of the cross-validation procedure. For example, it is interesting to see on which objects the recognition procedure fails more often. Perhaps these are errors in the data, outliers, or other interesting cases. By examining the characteristic properties of these objects, it is sometimes possible to understand in which direction it is worth improving your classification procedure.

Below is a table comparing classifiers from Moshkovskii et al., 2007. SVM and logistic regression (LR) were used as classifiers, and the feature selection methods were RFE (Recursive Feature Elimination) and Top Scoring Pairs (TSP). The confidence intervals make it possible to judge soundly whether one classification scheme is significantly better than another.

Literature

Here are some books and articles that may be useful in the analysis of proteomic data.

C. Bishop, Neural Networks for Pattern Recognition

* Berrar, Dubitzky, Granzow. Practical approach to microarray data analysis (Kluwer, 2003). The book is about microarray processing (although I would not recommend it as an introduction to the subject), but it also has a couple of interesting chapters. The illustration of the effect of outliers on the correlation coefficients is taken from there.

Literature marked with * is available in electronic form, and the author will share it free of charge.

…, etc. Moreover, the advent of fast modern computers and free software (such as R) has made all these computationally demanding methods available to almost every researcher. However, this accessibility further exacerbates a well-known problem with all statistical methods, often summarized in English as "rubbish in, rubbish out" (or "garbage in, garbage out"). The point is that miracles do not happen: if we do not pay due attention to how a given method works and what requirements it imposes on the data, the results obtained with it cannot be taken seriously. Therefore, researchers should always begin by carefully familiarizing themselves with the properties of the data and checking the conditions under which the relevant statistical methods are applicable. This initial stage of the analysis is called exploratory data analysis (EDA).

The statistical literature contains many recommendations for performing exploratory data analysis (EDA). Two years ago, the journal Methods in Ecology and Evolution published an excellent article that summarizes these recommendations in a single EDA protocol: Zuur A. F., Ieno E. N., Elphick C. S. (2010) A protocol for data exploration to avoid common statistical problems. Methods in Ecology and Evolution 1(1): 3-14. Although the article is written for biologists (ecologists in particular), the principles it sets out certainly hold for other scientific disciplines as well. In this and subsequent blog posts, I will provide excerpts from Zuur et al. (2010) and describe the EDA protocol proposed by the authors. As in the original article, the description of each step of the protocol will be accompanied by brief recommendations on the relevant functions and packages of R.

The proposed protocol includes the following main elements:

- Formulating the research hypothesis; performing experiments/observations to collect the data.

- Exploratory data analysis:

  - Identifying outliers

  - Checking homogeneity of variances

  - Checking normality of the data distribution

  - Detecting an excess of zero values

  - Detecting collinear variables

  - Identifying the nature of the relationships between the analyzed variables

  - Identifying interactions between predictor variables

  - Identifying spatio-temporal correlations among the values of the dependent variable

- Applying a statistical method (model) appropriate to the situation.

Zuur et al. (2010) note that EDA is most effective when a variety of graphical tools is used, since graphs often give a better understanding of the structure and properties of the data than formal statistical tests.

We begin our review of the EDA protocol with outlier detection. Statistical methods differ in their sensitivity to outliers. For example, when a generalized linear model is used to analyze a dependent variable that follows a Poisson distribution (such as the number of cases of a disease in different cities), outliers can cause overdispersion, which makes the model inapplicable. By contrast, in non-metric multidimensional scaling based on the Jaccard index, all the original data are converted to a binary (1/0) nominal scale, so outliers do not affect the result. The researcher must clearly understand these differences between methods and, where necessary, check the data for outliers. As a working definition, by an "outlier" we mean an observation that is "too" large or "too" small compared to most of the other observations.
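The overdispersion effect can be illustrated with the usual dispersion statistic, the Pearson chi-square divided by the residual degrees of freedom, which should be close to 1 for Poisson data. To keep the sketch dependency-free it assumes an intercept-only Poisson model (whose fitted mean is simply the sample mean) and hypothetical counts; in practice the statistic would be computed from a fitted GLM with predictors.

```python
import statistics


def poisson_dispersion(counts):
    """Pearson chi-square / residual df for an intercept-only Poisson model.

    Under the Poisson assumption the variance equals the mean, so values near 1
    are expected; values well above 1 indicate overdispersion.
    """
    mu = statistics.fmean(counts)  # fitted mean of the intercept-only model
    chi2 = sum((y - mu) ** 2 / mu for y in counts)
    return chi2 / (len(counts) - 1)


cases = [1, 6, 4, 7, 2, 4, 6, 2, 5, 3]  # hypothetical disease counts per city
with_outlier = cases[:-1] + [40]        # one city with an extreme count

print(round(poisson_dispersion(cases), 2))         # 1.0
print(round(poisson_dispersion(with_outlier), 2))  # 17.23
```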

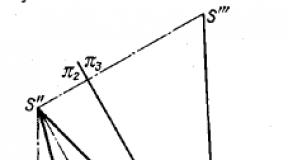

Outliers are typically detected with box plots (box-and-whisker plots). To draw them, R uses robust estimates of location (the median) and spread (the interquartile range, IQR). The upper "whisker" extends from the top of the "box" to the largest sample value within 1.5 × IQR of that boundary. Likewise, the lower "whisker" extends from the bottom of the "box" to the smallest sample value within 1.5 × IQR of it. Observations beyond the "whiskers" are considered potential outliers (Figure 1).

Figure 1. The structure of a box plot.
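The whisker rule can be sketched in a few lines. The quartiles below come from Python's statistics.quantiles with method="inclusive", which is an assumption: R's boxplot.stats() uses Tukey's hinges (via fivenum()), so on small samples the box edges, and hence the fences, may differ slightly. The data are hypothetical.

```python
import statistics


def boxplot_outliers(values, coef=1.5):
    """Whisker ends and points beyond coef * IQR from the box."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - coef * iqr, q3 + coef * iqr
    inside = [v for v in values if low <= v <= high]
    whiskers = (min(inside), max(inside))  # whiskers stop at the most extreme non-outlying values
    outliers = [v for v in values if v < low or v > high]
    return whiskers, outliers


sample = [12, 13, 13, 14, 14, 14, 15, 15, 16, 17, 25]
print(boxplot_outliers(sample))  # ((12, 17), [25])
```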

Examples of R functions used to draw box plots:

- The base function boxplot() (see its help page, ?boxplot, for details).

- Package ggplot2: the geometric object ("geom") boxplot. For example:

```r
library(ggplot2)

p <- ggplot(mtcars, aes(factor(cyl), mpg))
p + geom_boxplot()

# or, using the quick-plot shortcut (deprecated since ggplot2 3.4.0):
qplot(factor(cyl), mpg, data = mtcars, geom = "boxplot")
```

Figure 2. Cleveland dot plot of wing length for 1295 sparrows (Zuur et al. 2010). The data were first sorted by the weight of the birds, which is why the point cloud is roughly S-shaped.

In Figure 2, the point corresponding to a wing length of 68 mm clearly stands out. However, this wing length should not be considered an outlier, since it differs only slightly from the other lengths. The point stands out only because the wing-length values were ordered by the weight of the birds. Accordingly, the outlier should rather be sought among the weight values (i.e., a relatively long wing of 68 mm was recorded for a sparrow that is unusually light for such a wing).

Up to this point, we have called an "outlier" an observation that differs "significantly" from most other observations in the population under study. A more rigorous approach, however, is to assess how these unusual observations affect the results of the analysis. Here a distinction should be made between unusual values of the dependent variable and of the independent variables (predictors). For example, when studying how the abundance of a biological species depends on temperature, most temperature values may lie between 15 and 20 °C, with only one value equal to 25 °C. Such an experimental design is, to put it mildly, not ideal, since the range from 20 to 25 °C is covered very unevenly. In real field studies, however, the opportunity to take measurements at a high temperature may arise only once. What, then, should be done with this unusual measurement made at 25 °C? With a large number of observations, such rare observations can be excluded from the analysis. With a relatively small data set, however, reducing it even further may be undesirable from the point of view of the statistical significance of the results. If removing unusual predictor values is not possible for one reason or another, a transformation of the predictor (for example, taking the logarithm) can help.
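One formal way to quantify how unusual a predictor value is in a simple linear regression is its leverage (hat value), h_i = 1/n + (x_i - mean)^2 / Sxx. This goes slightly beyond the text and is offered only as an illustration; the temperatures are hypothetical and mimic the design described above.

```python
def leverages(x):
    """Hat values h_i = 1/n + (x_i - mean)^2 / Sxx for a simple linear regression."""
    n = len(x)
    mean = sum(x) / n
    sxx = sum((v - mean) ** 2 for v in x)
    return [1 / n + (v - mean) ** 2 / sxx for v in x]


temps = [15, 16, 16, 17, 18, 18, 19, 20, 25]  # hypothetical design: one point at 25 °C
for t, h in zip(temps, leverages(temps)):
    print(t, round(h, 2))
```

The leverages sum to 2 here (the number of coefficients), so a single point holding about 0.75 of that total can pull the fitted line strongly.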

It is harder to deal with unusual values of the dependent variable, especially when building regression models. A transformation such as taking the logarithm can help, but since the dependent variable is of primary interest in regression, it is better to find an analysis method based on a probability distribution that allows a greater spread of values at larger means (for example, the gamma distribution for continuous variables or the Poisson distribution for discrete count variables). This approach lets you work with the original values of the dependent variable.

Ultimately, the decision to remove outliers rests with the researcher, who must, however, remember that such observations can arise for different reasons. Removing outliers that result from a poor experimental design (see the temperature example above) can be quite justified. It is also justified to remove outliers that clearly arose from measurement errors. At the same time, unusual values of the dependent variable may require a more subtle approach, especially if they reflect the natural variability of that variable. It is therefore important to keep detailed documentation of the conditions under which the experimental part of the study takes place, since this can help interpret "outliers" during data analysis. Whatever the reasons for unusual observations, the final scientific report (for example, an article) should inform the reader both that such observations were found and what measures were taken in relation to them.